Abstract

Large Vision-Language Models (LVLMs) such as MiniGPT-4 and LLaVA have demonstrated the ability to understand images and have achieved remarkable performance on various visual tasks. Although extensive training data makes them strong at recognizing common objects, they lack domain-specific knowledge and have a weaker understanding of localized details within objects, which limits their effectiveness in the Industrial Anomaly Detection (IAD) task. Meanwhile, most existing IAD methods only provide anomaly scores and require thresholds to be set manually to separate normal from abnormal samples, which restricts their practical use. In this paper, we explore the use of LVLMs to address the IAD problem and propose AnomalyGPT, a novel IAD approach based on an LVLM. We generate training data by simulating anomalous images and producing a corresponding textual description for each image. We also employ an image decoder to provide fine-grained semantics and design a prompt learner to fine-tune the LVLM using prompt embeddings. AnomalyGPT eliminates the need for manual threshold adjustment and thus directly assesses the presence and location of anomalies. Additionally, AnomalyGPT supports multi-turn dialogues and exhibits impressive few-shot in-context learning capabilities. With only one normal shot, AnomalyGPT achieves state-of-the-art performance on the MVTec-AD dataset, with an accuracy of 86.1%, an image-level AUC of 94.1%, and a pixel-level AUC of 95.3%.
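The data-generation idea in the abstract (simulate an anomalous image, then produce a matching textual description) can be illustrated with a toy cut-paste sketch. This is not the authors' pipeline: the function names `simulate_anomaly` and `describe`, the 3x3 position grid, and the wording of the generated sentences are all assumptions made for illustration.

```python
import random


def simulate_anomaly(image, patch_h, patch_w, rng):
    """Copy a patch from one random location and paste it at another.

    `image` is a 2D list of grayscale values. Returns the edited copy and a
    binary mask marking the pasted region (1 = simulated anomaly).
    """
    height, width = len(image), len(image[0])
    # Pick independent source and destination corners for the patch.
    src_r = rng.randrange(height - patch_h + 1)
    src_c = rng.randrange(width - patch_w + 1)
    dst_r = rng.randrange(height - patch_h + 1)
    dst_c = rng.randrange(width - patch_w + 1)

    edited = [row[:] for row in image]
    mask = [[0] * width for _ in range(height)]
    for dr in range(patch_h):
        for dc in range(patch_w):
            edited[dst_r + dr][dst_c + dc] = image[src_r + dr][src_c + dc]
            mask[dst_r + dr][dst_c + dc] = 1
    return edited, mask


def describe(mask):
    """Turn a mask into a coarse description (hypothetical wording)."""
    height, width = len(mask), len(mask[0])
    cells = [(r, c) for r in range(height) for c in range(width) if mask[r][c]]
    if not cells:
        return "No, there is no anomaly in the image."
    # Locate the mask centroid on a 3x3 grid of named positions.
    center_r = sum(r for r, _ in cells) / len(cells)
    center_c = sum(c for _, c in cells) / len(cells)
    vertical = ["top", "center", "bottom"][min(2, int(3 * center_r / height))]
    horizontal = ["left", "center", "right"][min(2, int(3 * center_c / width))]
    if vertical == horizontal == "center":
        position = "center"
    else:
        position = f"{vertical} {horizontal}"
    return f"Yes, there is an anomaly at the {position} of the image."


# Toy 8x8 "image" whose pixel values encode their own position.
image = [[r * 8 + c for c in range(8)] for r in range(8)]
edited, mask = simulate_anomaly(image, 2, 3, random.Random(0))
print(describe(mask))
```

Each simulated sample yields an (image, mask, description) triple, which is the kind of paired supervision the abstract describes. In this sketch the source and destination may coincide, so a real pipeline would need to reject such no-op pastes.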

Model

AnomalyGPT is the first Industrial Anomaly Detection (IAD) method based on a Large Vision-Language Model (LVLM) that can detect anomalies in industrial images without manually specified thresholds. Existing IAD methods only provide anomaly scores and require manual threshold setting, while existing LVLMs cannot detect anomalies in an image. AnomalyGPT not only indicates the presence and location of anomalies but also provides information about the image.
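To make the threshold problem concrete, the following sketch shows how the decisions of a score-only detector change with the chosen threshold. The scores and labels are made-up illustrative values, not results from any method or dataset, and `classify` and `accuracy` are hypothetical helper names.

```python
def classify(scores, threshold):
    """Flag a sample as anomalous when its score reaches the threshold."""
    return [score >= threshold for score in scores]


def accuracy(predictions, labels):
    """Fraction of predictions that agree with the ground-truth labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Made-up anomaly scores and ground truth (True = anomalous).
scores = [0.12, 0.35, 0.41, 0.58, 0.77, 0.93]
labels = [False, False, False, True, True, True]

# The same scores give different accuracies under different thresholds.
for threshold in (0.3, 0.5, 0.8):
    print(threshold, accuracy(classify(scores, threshold), labels))
```

The scores never change, yet the measured accuracy depends entirely on a value the user has to pick. A model that answers "yes" or "no" directly removes this tuning step.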

Comparison between AnomalyGPT and existing methods.

We leverage a pre-trained image encoder and a Large Language Model (LLM) to align IAD images with their corresponding textual descriptions via simulated anomaly data. We employ a lightweight image decoder based on visual-textual feature matching to obtain localization results, and we design a prompt learner that provides fine-grained semantics to the LLM and fine-tunes the LVLM using prompt embeddings. Our method can also detect anomalies in previously unseen items when given only a few normal samples.
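As a rough illustration of visual-textual feature matching, the sketch below compares each image patch feature with two text features, one for "normal" and one for "abnormal", and turns the two similarities into a per-patch anomaly probability. It is a generic sketch under stated assumptions, not the authors' decoder: the function names, the two-text setup, the softmax with a `temperature` of 0.07, and the toy 2-dimensional features are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def anomaly_map(patch_features, normal_text, abnormal_text, temperature=0.07):
    """Return a grid of per-patch anomaly probabilities.

    `patch_features` is a 2D grid (list of rows) of feature vectors. Each
    patch is scored by a softmax over its similarity to the two text
    features; the probability assigned to "abnormal" is kept.
    """
    result = []
    for row in patch_features:
        out_row = []
        for feature in row:
            s_normal = cosine(feature, normal_text) / temperature
            s_abnormal = cosine(feature, abnormal_text) / temperature
            # A two-way softmax reduces to a sigmoid of the score difference.
            out_row.append(1.0 / (1.0 + math.exp(s_normal - s_abnormal)))
        result.append(out_row)
    return result


# Toy features: axis 0 stands for "normal", axis 1 for "abnormal".
normal_text = [1.0, 0.0]
abnormal_text = [0.0, 1.0]
patches = [
    [[0.9, 0.1], [0.8, 0.2]],
    [[0.7, 0.3], [0.1, 0.9]],  # bottom-right patch looks abnormal
]
for row in anomaly_map(patches, normal_text, abnormal_text):
    print([round(p, 3) for p in row])
```

In practice the patch and text features would come from pre-trained encoders, and the low-resolution map would be upsampled to image size to give a pixel-level localization result.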

The architecture of AnomalyGPT.

Capabilities

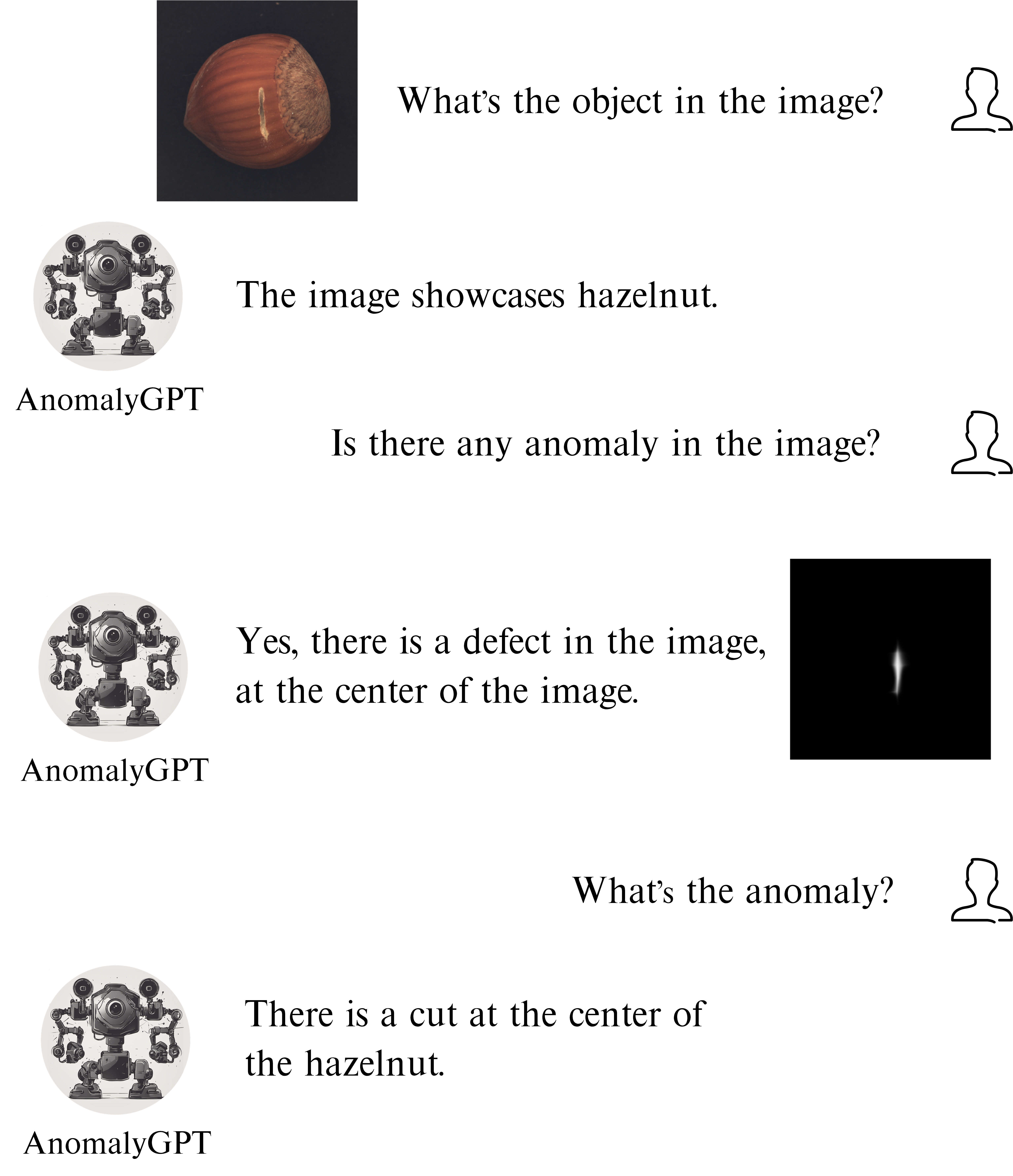

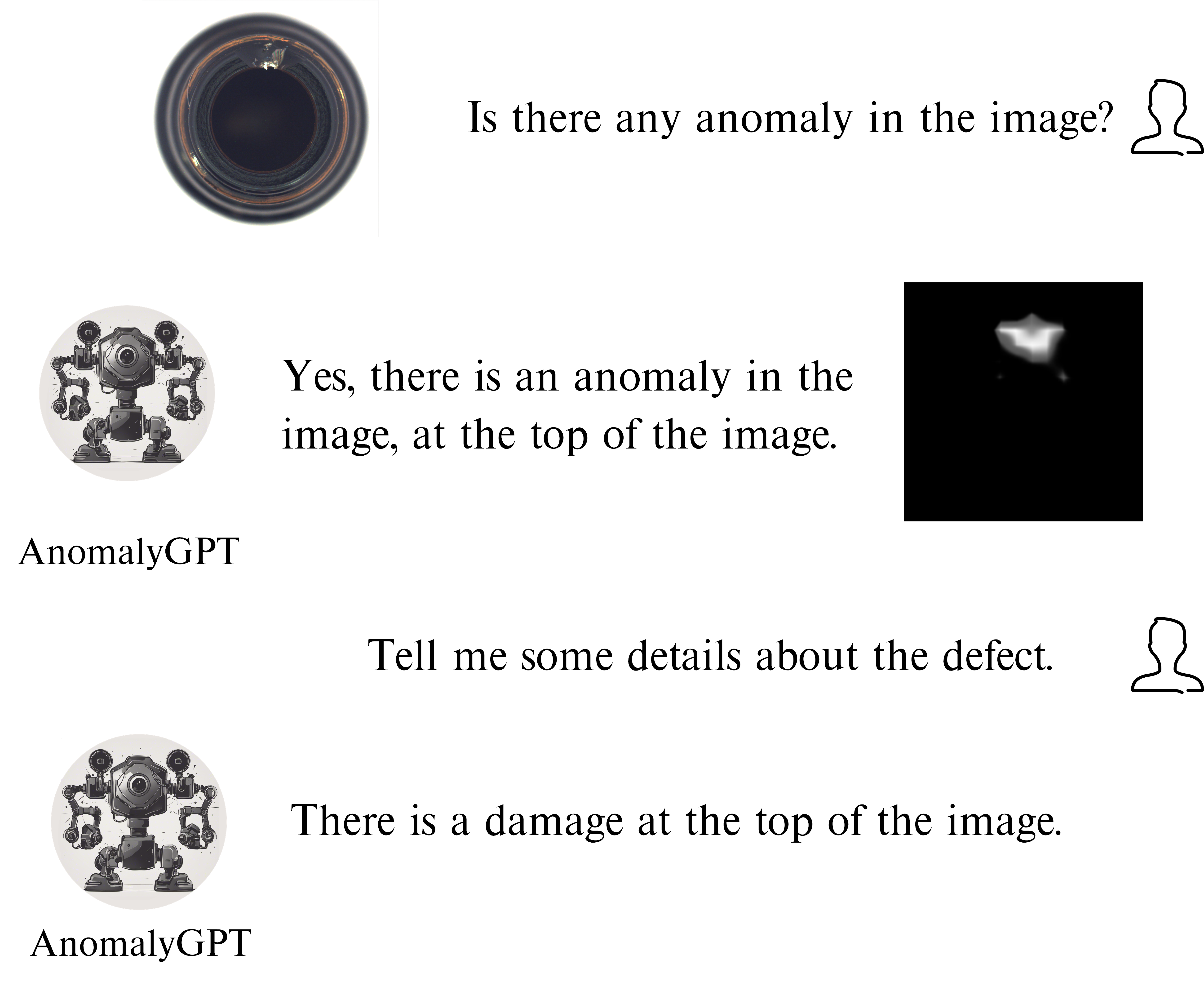

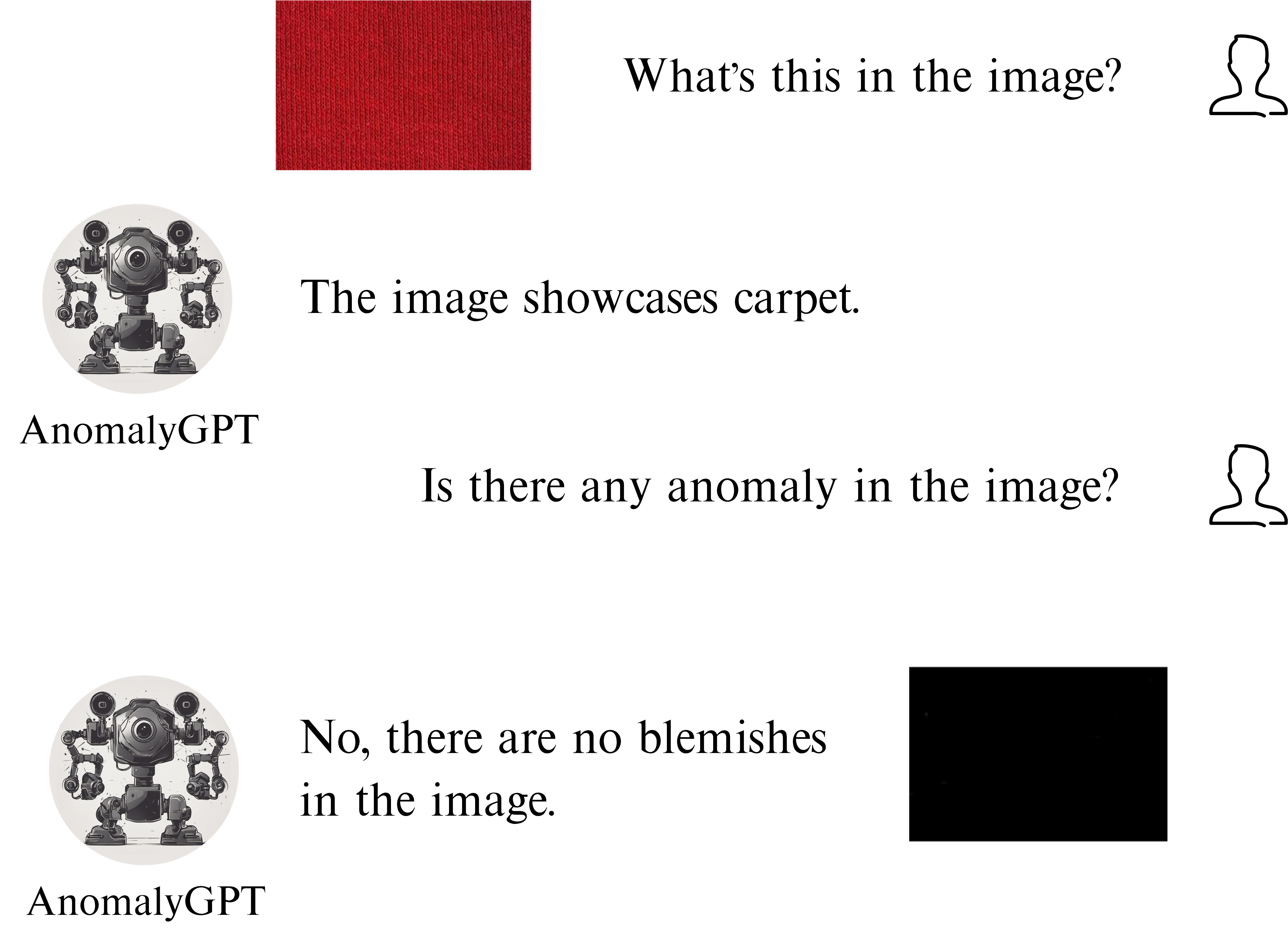

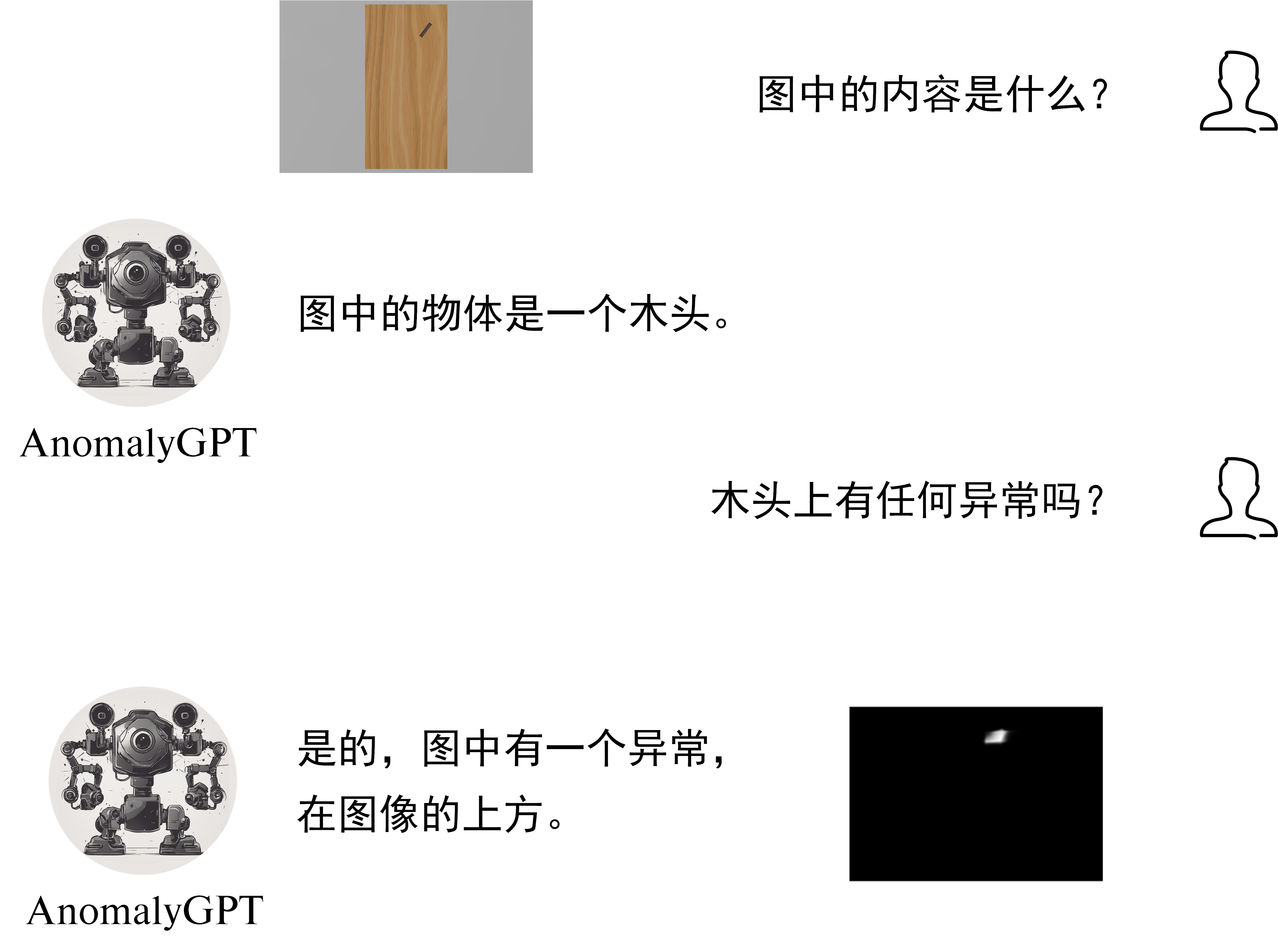

Unlike existing IAD methods, which only provide anomaly scores, and existing LVLMs, which cannot detect anomalies in industrial images, AnomalyGPT directly indicates the presence and location of anomalies without manual threshold setting and also provides information about the image. The capabilities of AnomalyGPT include, but are not limited to, the following (examples are shown at the bottom of this page):

- indicating whether an image contains anomalies.

- pinpointing the locations of anomalies.

- engaging in multi-turn dialogues related to the image.

- conversing in English and Chinese.

- ... (explore our demo on your own!)

BibTeX

@article{gu2023anomalygpt,
  title={AnomalyGPT: Detecting Industrial Anomalies using Large Vision-Language Models},
  author={Gu, Zhaopeng and Zhu, Bingke and Zhu, Guibo and Chen, Yingying and Tang, Ming and Wang, Jinqiao},
  journal={arXiv preprint arXiv:2308.15366},
  year={2023}
}

Acknowledgement

This website template is borrowed from the MiniGPT-4 project, which is adapted from Nerfies, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Examples

An image of concrete with a crack.

A cracked capsule.

An image of a cut hazelnut.

A damaged bottle.

A photo of normal carpet.

A photo of a piece of wood with a defect.

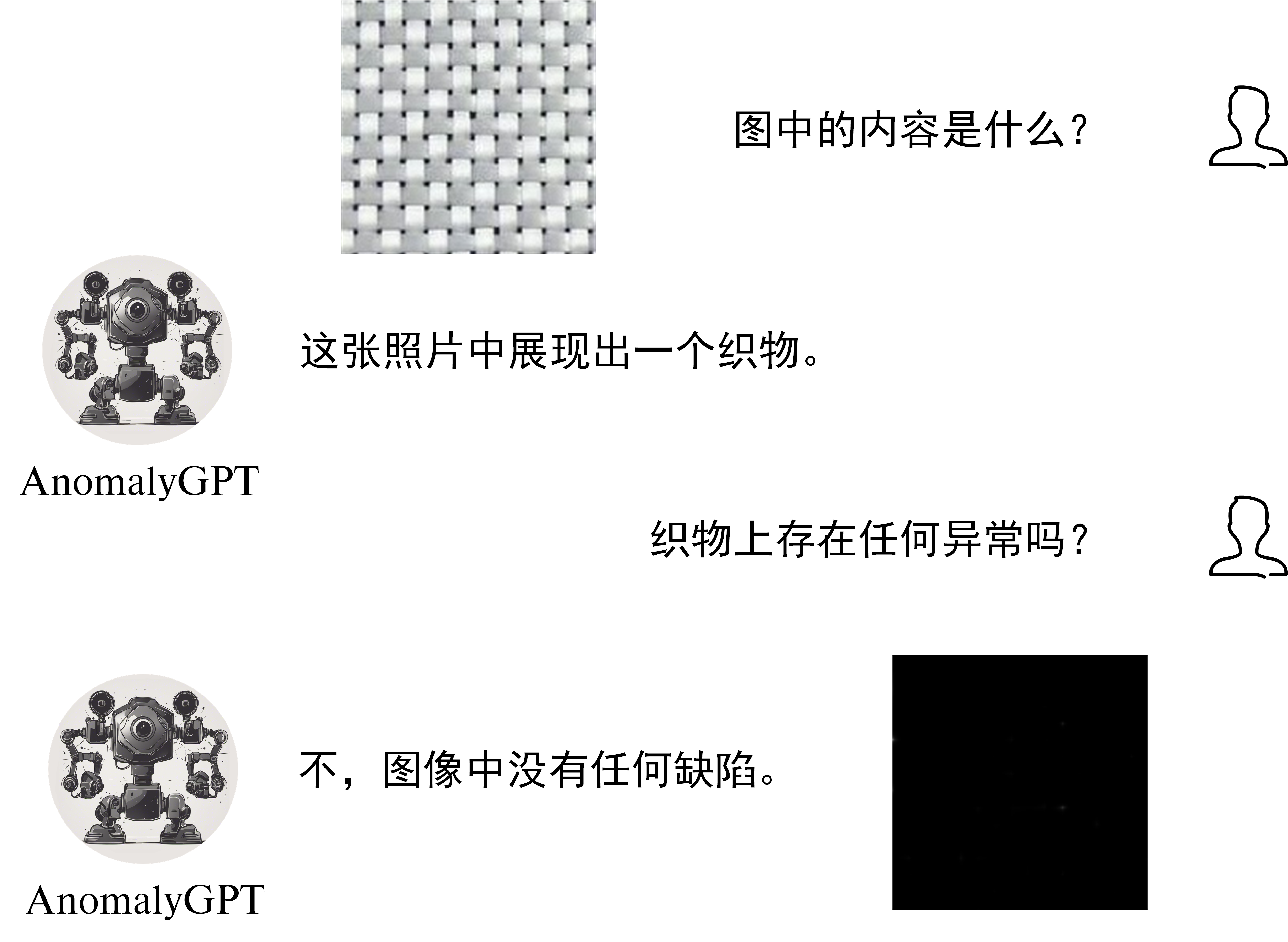

A piece of normal fabric.